The first scientific mnemonic I can remember is King Philip Came Over For Good Spaghetti: Kingdom, Phylum, Class, Order, Family, Genus, Species. That was a long time ago, and biological classification now seems very different (as far as I can tell from Wikipedia), though it’s unclear to me whether cladistics wasn’t the standard back then, or whether it wasn’t taught in the introductory material that came over with King Philip. Still, it remains true that binomial nomenclature is the standard for the most basic unit of biological classification: species.

When you are learning the basics, you usually don’t stop to question them, at least not very deeply, because if you don’t start by accepting most of what you hear, you won’t ever learn enough to really question everything you’re told. But even back then, the idea that Homo sapiens stood uniquely alone in the classification of all living things seemed very questionable. Humans are considered a monotypic species, which here means that the species is the sole living member of the rank above it, the genus. I remember thinking that it made sense to group together a lion (Panthera leo), tiger (Panthera tigris), jaguar (Panthera onca), and leopard (Panthera pardus). But why was Homo sapiens all alone? Does that really seem likely to be true, now and forever?

There are other monotypic species; some are even singular through multiple ranks. The aardvark (Orycteropus afer) is the only member of its genus, which is the only genus in its family, making it the loneliest mammal on the planet. There’s a monotypic species of fish (Ozichthys albimaculosus) and butterfly (Eucheira socialis), and several monotypic plants. The hyacinth macaw (Anodorhynchus hyacinthinus) was once thought to be monotypic, until a compatriot (Anodorhynchus leari) was correctly identified over a hundred years after it was discovered and originally misclassified. In all of these cases, it’s easy to understand the isolation of the species as a function of either specific adaptations to an available habitat, or as isolation imposed by a habitat that has become unavailable. That is, the aardvark’s habitat is not rare, but its evolutionary adaptations are so specific that its singularity doesn’t seem strange – what’s strange is eating termites, but termites can be found in a lot of places. On the other hand, the Madrone butterfly exists only where madrone trees exist, in high elevations in Mexico – the geographic specificity of the habitat explains the singularity of the species.

The singularity of Homo sapiens is much harder to accept. Human adaptation is so generally applicable that we can thrive in any habitat. As we’ve expanded across the Earth, no isolation of habitat has yet cut us off from further access to evolutionary development; indeed, the opposite is true: we’ve expanded into every habitat and we live in increasingly interconnected ways. There’s no obvious explanation for the absence of other human species. The idea that we stand alone seems like a category error.

Prior to the modern understanding of evolution, biologists theorized that a missing link existed between humans and apes. At one time, humans were thought to be the only “hominids,” and all other apes were called “pongids” – many people sought a missing link between the two, but it was never found because we had misclassified the relationship between hominids and pongids. In the modern understanding, humans and apes are in the same family – turns out, we’re all hominids. So the search for a missing link is now thought to be a fool’s errand.

In this essay, I am that fool. I speculate that the links that are missing aren’t between humans and other apes, but among humans themselves. I propose that Homo sapiens has already evolved into separate species, and possibly we were never a monotypic species for very long.

This is a claim so outlandish that I can only compare it to Copernicus, who examined the complicated orbits of Ptolemaic star charts and realized the absurdity of putting humans in the center of the picture. If you insist that the Sun revolves around the Earth, you need ungainly mathematical gymnastics to work out the orbits of the other planets. It’s a lot simpler to adopt a frame of reference where the Earth revolves around the Sun.

That simple shift in framing was the beginning of a scientific revolution that defines how we live to this day. What I can outline today lacks such impact only because of my inability to specify all that is required in a single essay. However, I aim to provide notes that might inspire a modern-day Aristarchus of Samos, who theorized that the Earth revolves around the Sun, while being utterly without the knowledge or data to prove it.

Aristarchus lived more than three centuries before Ptolemy, and Copernicus lived another millennium and a half after that. We’re only a century and a half after Darwin, but knowledge moves faster these days. Have we properly applied what we’ve learned about evolution to the case of Homo sapiens? A frame of reference that makes us apparently above the laws of evolution must be regarded as inherently suspect.

Are Humans Above Evolution?

One way to ease into this inquiry is to ask whether human evolution has stopped. We don’t have to ask this question, and it seems outlandish to bother to try, but let’s ask anyway. The average duration of a species is 1.2 million years, as far as we can tell from the geological record, and Homo sapiens has been around for only about 200 thousand years, or maybe half a million at most. Isn’t it several hundred thousand years too early to consider this question?

We only estimate the lifespan of species that leave a geological record, some trace of their time on Earth buried deep within its layers. We have no way of knowing about the existence of species that left no record. The shorter the lifespan of a species, the less likely it is that there was enough time and development to leave a geological record. So it’s entirely possible that the average lifespan of species is much shorter than we have been able to measure. Nevertheless, we can sharpen this question by saying that humans now are nearly certain to leave a geological record that will be readable far into the future. Maybe we can assume that the average duration of any species that leaves a readable geological record is over a million years, so therefore we can assume that our species will last a lot longer than we have so far.
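The bias described above can be made concrete with a small calculation. The sketch below is purely illustrative: the lifespans, the species counts, and the rule that the chance of leaving a record grows linearly with lifespan are all invented assumptions, not measurements. It only shows that if short-lived species are less likely to leave a record, the average lifespan read from the record will overstate the true average.

```python
# Toy illustration of survivorship bias in estimating species duration.
# Every number here is invented for illustration; none comes from real data.

# Hypothetical "true" population: (lifespan in millions of years, number of species)
true_population = [(0.1, 500), (0.5, 300), (1.0, 150), (3.0, 50)]


def detection_probability(lifespan_myr, scale=2.0):
    """Assumed chance that a species leaves a readable record.

    Grows linearly with lifespan and is capped at 1.0 (an assumption).
    """
    return min(1.0, lifespan_myr / scale)


def mean_lifespan(weighted):
    """Weighted mean of (lifespan, weight) pairs."""
    total_weight = sum(weight for _, weight in weighted)
    return sum(lifespan * weight for lifespan, weight in weighted) / total_weight


# Expected contents of the record: each count scaled by its detection probability.
observed = [(l, n * detection_probability(l)) for l, n in true_population]

true_mean = mean_lifespan(true_population)
observed_mean = mean_lifespan(observed)
print(f"true mean: {true_mean:.2f} Myr, observed mean: {observed_mean:.2f} Myr")
```

With these made-up inputs, the mean computed from the record comes out well above the mean of the full population, which is the direction of error the paragraph above worries about.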

But this math-based objection probably isn’t why many of us believe that human evolution isn’t occurring. A stronger reason is just that we find it difficult to conceive of our evolution, because we have dominated the Earth. Other species evolve due to changes in habitat and environment, but our species is defined by its mastery over habitat. We might not survive changes to our environment, but if we do, it will be through either mastery over the environment itself, or due to human-developed adaptations to environmental change. If the planet warms, the ice melts, the oceans rise, and the atmosphere allows in unprecedented radiation – in any scenario where we don’t all die, some will adapt. Will that adaptation be considered human speciation? If some of us learn to live underground, and subsequently develop improved ability to see in the dark, will that be speciation? If some of us are enhanced with bionic lungs, artificial gills, and metallic skin thick enough to repel radiation, will that be speciation?

The traditional definition of species is that members of a common species can generally produce fertile offspring through sexual reproduction. There may be exceptions within the group, but these exceptions are not typical of the group. For the sake of brevity, I’ll use the term “can mate” to mean “can generally produce fertile offspring through sexual reproduction.” The term mating is inexact and arguably overbroad, but it’s better than typing four times the number of words necessary every time. So: members of the same species can mate. If different types of animals cannot mate, then they are not of the same species.

Consider again a scenario where the environment has changed so much that some humans choose to live underground while others remain above ground. Assume that after a very long period of time passes, the underground-dwellers can see much better in the dark than the aboveground folk. Are these two groups of humans now different species? The usual analysis would conclude that as long as members of the two groups could still mate, we should say no.

What about a situation where one set of humans develops a revolutionary treatment that inserts rare elements into their skin, at a molecular level involving genetic editing, so that no amount of sunlight will harm them? Are they still human? We might still say yes, assuming they can still mate with people who don’t get the molecular treatment, but the editing of genetic material will give many of us pause. Is an integration of non-organic technology with human life enough to create a new species?

In either situation, we probably are inclined to wait before rendering judgment. The longer we wait after the change (i.e. living underground, artificial skin), the likelier it is that morphological changes will evolve that absolutely preclude the possibility of mating. In fact, the usual practice of professional taxonomists is to only identify a species after such changes have occurred and can be mapped to phylogenetic markers. The state of the art of biological classification today demands that we be able to see the boundaries between species in their DNA sequences.

Remember, though, that today’s state of the art is tomorrow’s obsolete mistake. In the case of humans, stopping the analysis at DNA sequences is a very strange thing to do, since we do not currently know the relationship between patterns of mind and any genetic marker, and yet many scientists suspect a relationship will eventually be proven. Why should we let our own ignorance be the boundary to speciation? How can we analyze differentiation within a species without looking at the most critical features that actually distinguish it as a unique species? It’s like trying to distinguish fish without looking at their gills.

What defines Homo sapiens as a unique species is the product of our minds. We can argue about exactly which products are crucial from an evolutionary standpoint – language or emotion or consciousness or whatever – but there is no question that the evolutionary prospects of our species have always been entirely dependent on the products of our minds. Once we have acknowledged this completely uncontroversial fact, why would we insist that human speciation must be defined by features that are entirely unrelated to the features that make us human? Human speciation must be defined by something that is happening in our minds.

It may seem unscientific to suggest that mere mental activity can be a boundary for species. Genetic material, whether or not you’ve ever seen a strand of DNA, seems more real than thoughts. We’ve seen pictures and diagrams, we know this is an actual object of science. Who has ever laid eyes on a thought? But remember how we got here: DNA sequencing replaced rougher methods of measurement as the preferred tool for biological classification; we updated our techniques because the science advanced. In earlier days, biologists made many mistakes in classification by relying only on visual features – in humans, this has had disastrous results in eugenics and racism. Phylogenetic analysis, for which DNA sequences are the key texts, is vastly superior to prior methods. But it’s not the end of the story.

Most biologists reject mind-body dualism, which is the idea that the sense of self constructed in the mind occurs entirely separate from all material aspects of the body. Almost no scientist believes that a human can have a thought without some observable activity in the brain. Broadly speaking, all of neuroscience is devoted to identifying the biological properties of mental activities. We aren’t very close to being able to match habitual patterns of thinking to heritable genetic markers, but closing that gap seems like a very realistic possibility. As we understand more about how the processes of the mind manifest in matter, we may end up discarding DNA sequencing altogether, just as we abandoned Darwin’s mistaken theory of pangenesis. Or we might better understand what the genetic markers tell us as we learn more about how patterns of thoughts are observable in biology.

Assume that one day, we will find the biological markers of thought patterns, and that these markers are heritable (i.e. transmissible from one generation to the next). The real question here is whether differences in minds are so profound that they can prevent mating, with such prevention being meaningful enough to describe separate species.

The Veil of Limited Perfection

Let’s consider this question with a thought experiment, where we consider the world as viewed through a “Veil of Limited Perfection” – assume all of the people in the thought experiment are exactly the same people as in our world, except that all problems of safety, health, and economics are solved. (Make no assumptions about how the problems were solved! This world is no more likely to be dominated by socialism than capitalism, for example.) No other problems are solved; in particular, sexual reproduction is still the only way for humans to procreate, and our mating rituals and characteristics remain largely the same. No one ever experiences unwanted fear, no one ever dies an unnatural death, and everyone has as much financial resource as they want – but you still have to figure out who to date. In such a world, can different species of humans ever evolve?

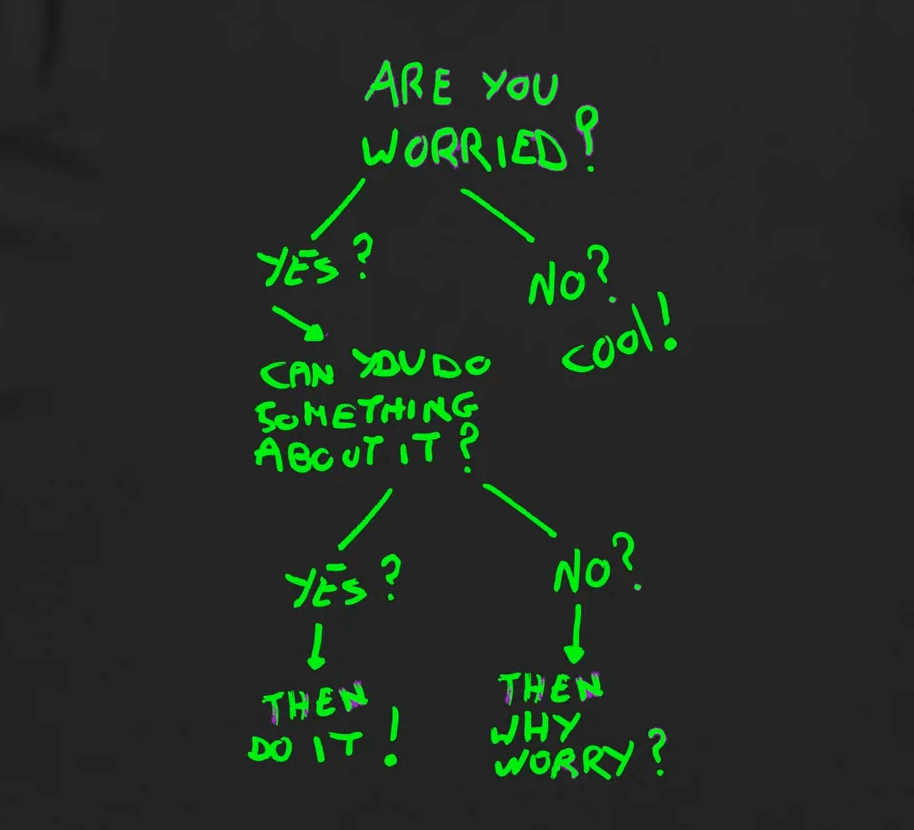

Would every single human being be capable of mating with any other? You could say “Yes, in theory.” You have to say “in theory” to account for the fact that no human would happily mate with a randomly selected human from anywhere on the planet, even though in this thought experiment, that would not affect their safety, health, or wealth. Every single human would continue to have mating preferences, and these preferences would of course not be based entirely on physical features. Instead, preferences would be largely if not entirely about the products of the minds of prospective mates. Are they happy, kind, generous? Are they courageous, resilient, or honest? Do they like the same music, movies, books? Do they like sex the way you like it? All of these questions, and their answers, exist in the minds of prospective mates. Beyond the Veil of Limited Perfection, it’s clear that survival of the fittest is a process determined entirely by products of our minds.

Note also that this world would not be more perfect if we could wish away our preferences. That would mean removing all differences in opinion, temperament, and intellect. It would mean a world without variation in art, or music, or drama or comedy. People do not enjoy all expressions of these equally, and we tend to enjoy other people who enjoy things that are complementary to what we enjoy.

So beyond the Veil of Limited Perfection, each person has a set of people that they would willingly mate with. Looking at the preferences of all people, you can construct sets that include only people who would all be willing to mate with any member of the same set. You could call that a “mutual intra-mating preference group” – but this is a cumbersome name, so for now let’s use “phyloculture” instead. This term risks considerable confusion, since it implies that culture evolves through evolutionary processes, and it’s not yet clear that culture is what we’re talking about here. But if we need a term to describe what is shared between people who enjoy a related set of opinions, temperament, art and music – what better term is there than culture?

Since a phyloculture is defined as a “mutual intra-mating preference group,” can we say that different phylocultures are in fact different species? Why not, if by definition no human would choose to mate outside their own phyloculture?

A simple objection is: “But people can still choose to mate outside their phyloculture, can’t they?” No: if they are willing to mate with each other, then by definition they are in the same phyloculture.
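One way to make the definition concrete is to treat mutual willingness as a graph and read a “mutual intra-mating preference group” as a maximal set of people who are all mutually willing (a maximal clique). That reading is an assumption of this sketch, not something the essay specifies, and the names and preferences below are hypothetical. The enumeration uses the standard Bron-Kerbosch procedure.

```python
# Hypothetical people and whom each would willingly mate with (one direction only).
willing = {
    "Ana": {"Ben", "Cal", "Dee"},
    "Ben": {"Ana", "Cal"},
    "Cal": {"Ana", "Ben", "Dee"},
    "Dee": {"Ana", "Cal", "Eli"},
    "Eli": {"Ana", "Dee"},  # Eli is willing with Ana, but Ana is not willing with Eli
}

# Keep only mutual willingness: a link needs willingness in both directions.
mutual = {
    person: {other for other in prefs if person in willing.get(other, set())}
    for person, prefs in willing.items()
}


def maximal_groups(graph):
    """Enumerate maximal sets of mutually linked people (Bron-Kerbosch)."""
    found = []

    def expand(current, candidates, excluded):
        if not candidates and not excluded:
            found.append(frozenset(current))
            return
        for person in list(candidates):
            expand(current | {person},
                   candidates & graph[person],
                   excluded & graph[person])
            candidates = candidates - {person}
            excluded = excluded | {person}

    expand(set(), set(graph), set())
    return found


def share_a_group(a, b, groups):
    """True if a and b appear together in at least one group."""
    return any(a in group and b in group for group in groups)


groups = maximal_groups(mutual)
for group in groups:
    print(sorted(group))
```

In this sketch every mutually willing pair ends up together in at least one group, which mirrors the answer to the simple objection. Under this reading a person can also belong to more than one group; whether that matches the intended meaning of phyloculture is left open here.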

The harder form of this objection asks how consent can possibly be considered a barrier in whether animals can generally produce fertile offspring through sexual reproduction. But this objection has already been addressed: in humans, we must look for speciation in the features that define us as humans; as these features are within our minds, we must look at the products of our minds to identify the distinguishing barriers between species (since we do not yet have the capability of genetically identifying the material processes within our minds). The reason that we do not consider consent as a question in the mating of animals is not that consent is irrelevant, but that animals are not capable of consent. (Of course, some argue that animals are capable of consent, but that has no bearing on whether humans have speciated.)

Now, take off the Veil of Limited Perfection. Do you have a set of people that you would willingly mate with? Of course you do. If you knew the same kind of information about everyone on the planet that you know about your set, would your set include everyone? Of course not. The fact is, you already have a mutual intra-mating preference group. You just can’t see it, because it’s distorted by considerations of safety, health, and wealth.

Phylocultures exist today, but they are hidden by social phenomena. Remarkably, many of those phenomena have decreasing importance to species survival over time. In the early days of Homo sapiens, the species could not survive simple threats to safety or health. As we developed increasingly sophisticated social structures, economic considerations also greatly affected human survival. But nearly all humans alive today have considerably better prospects for matters of basic survival than humans of a thousand years ago. Another way of saying this is: Human speciation has already occurred, you just didn’t notice because it was hidden by earlier survival needs.

Finally, I can reveal that I decided to use the term “culture” despite possible confusion because I’m adding a dubious corollary to this theory of human speciation: As cultures evolve, they will tend to evolve into phylocultures, or they will disappear. In the future, there will be no cultures other than phylocultures.

Cultural Evolution in an Interconnected World

Cultural evolution is a field with an ugly history of controversy, as it is closely aligned with repugnant ideas about race and nationalism, and eugenics and genocide. We should take seriously the possibility that the ideas here can similarly be distorted by supporters of repugnant ideologies. These matters may deserve a separate follow-up essay to address all concerns in detail, but for now, suffice it to say that I categorically reject racism, nationalism, eugenics, and genocide. I’m a crazy amateur political philosopher, but I’m not a monster; the two groups overlap significantly, but that obviously doesn’t mean that every crazy amateur philosopher sympathizes with the monsters.

The study of cultural evolution routinely assumes that culture is transmitted through social means. The newer subfield of biocultural evolution posits that an interplay of genetic and social factors results in the evolution of cultures. One of the more common objections to the idea of cultural evolution is the assertion that evolution only acts on an individual level, sometimes even going so far as to say that only genes evolve, not people. Biocultural evolution has a great answer to this: individual genetic evolution has emergent properties that are only interpretable at a group level. As an over-simplified example: if some set of genes contributes to musical talent, and if a particular culture values musical talent (including in mating), then cultural reinforcement of the value of music will favor the continued advantage of those genes in a virtuous cycle.
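The virtuous cycle in the music example can be sketched as a toy feedback loop. Everything here is an assumption made for illustration: the single "gene" frequency, the rule that cultural value drifts toward that frequency, and every parameter value. It is not a model taken from the biocultural evolution literature. The only standard piece is the one-generation haploid selection update, in which carriers have fitness 1 + advantage.

```python
def next_gene_frequency(p, advantage):
    """One generation of haploid selection; carriers have fitness 1 + advantage."""
    return p * (1 + advantage) / (1 + p * advantage)


def simulate(generations, p0=0.2, value0=0.1, feedback=0.5, max_advantage=0.1):
    """Track gene frequency while cultural value responds to the gene's prevalence.

    `value` is how much the culture prizes the trait, between 0 and 1. Each
    generation it moves toward the current gene frequency at rate `feedback`
    (an assumed rule), and the mating advantage is max_advantage * value.
    """
    p, value = p0, value0
    history = [p]
    for _ in range(generations):
        p = next_gene_frequency(p, max_advantage * value)
        value += feedback * (p - value)
        history.append(p)
    return history


with_feedback = simulate(100)
without_feedback = simulate(100, feedback=0.0)  # cultural value stays fixed
print(f"after 100 generations: {with_feedback[-1]:.3f} with feedback, "
      f"{without_feedback[-1]:.3f} without")
```

In this toy setup the gene spreads in both runs, but it spreads further when the culture's valuation is allowed to rise along with it, which is the reinforcement the example describes.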

There are of course cultural traits that have value between groups, not just within them. Put a warlike culture next to one that is not, in circumstances where war is common and resources are scarce, and the warlike culture has a group advantage that has evolutionary impact on the other group. However, cultural advantages rise and fall much more rapidly than natural habitats. If warlike culture were always an advantage, presumably we would all be Spartans.

And this is the first key to understanding how cultures will evolve into more visible phylocultures. When considering the advantages of traits that are expressed by the body, the background timetable is provided by changes in habitat, which occur over epochs. For advantages of traits expressed by the mind, the background timetable is provided by changes in culture, which evolve much faster than habitat, and faster still as time goes on. As far as we can tell, the culture of every type of early human was relatively static for millennia. In the Common Era, cultural change usually occurred over centuries. But in the last century, cultures changed by the decade, and in this decade, many of us have experienced cultural change just in the past year. So human speciation, properly understood, is happening faster than ever. That doesn’t mean the acceleration will continue, but it does mean that there might be more to analyze about human speciation from the last few decades than from all of human history prior to that.

In prehistoric times, Homo sapiens coexisted with other hominins (including interbreeding, by the way, and yet we still view these as separate species). We may have had similar cultures, but we had very separate geographies. Then, as the human population grew to cover the Earth and we finally developed the technology for a very high degree of interconnection, there came a point in the 20th century when we talked about a “monoculture,” because we were so many and so connected that it seemed like a concentration of media power would drive a single dominant culture.

And then the Internet happened. In the glory days after the turn of the millennium, we crowed about the disaggregation of media and the disintermediation of corporate gatekeepers. Microcontent and microtargeting at first seemed to mean thousands of different cultures were possible. But that was an illusion. The reality is, concentration of media power has reassembled, in only a slightly different configuration. You can see it if you look for it: reconfiguration and consolidation of online cultures is happening now, very rapidly. And online culture increasingly forms and reflects offline culture. The importance of geography, nationality, race, and even religion in forming cultural boundaries has diminished. People are more united now by thoughts, opinions, and tastes that are relatively free of those old boundaries, and getting more free all the time. As this process continues, the observable features of phylocultures will become more and more prominent.

Where Does This End?

It never ends. Evolution never ends … or does it? (Or were you just asking, when is this incredibly long essay going to end?)

I don’t know. I have a theory, and since that theory builds upon this one, it is even crazier than the notions here. But I’ve already obliquely revealed the beginnings of an outline in a prior essay, which is actually my first statement of three phylocultures that I believe exist today. I believe that properly structured research would show credible material supporting the existence of at least three human species today. It would take a lot of work over many years to properly design and conduct this research, and frankly I’m not qualified for the task. However, as a closing note and a stake in the ground, I’ll assert the first proposed binomial nomenclature for these ostensible species: Homo fidelus (The Culture of Belief), Homo humanitas (The Culture of Humanity), Homo cognitio (The Culture of Knowledge).

I’m no Linnaeus, I’m certainly no Copernicus, and I only hope to inspire an Aristarchus. But if you think I’m crazy, you’re probably a different species than I am.